Hearing the sound of silence

AI-based sign language learning cultivates a more inclusive world

Nearly one in every 1,000 babies worldwide is born with a hearing impairment. Sign language is far more than a set of gestures used by deaf people: it has its own linguistic system and culture. Hearing people who live or work with deaf people also need to learn sign language in order to communicate with them.

Hearing people often find it difficult to master the grammatical nuances of sign language, including hand and finger coordination, facial expressions, head positions and mouth shapes. Get one element wrong, and the message will often be misunderstood. Some people assume that deaf people can cope perfectly well with written communication or text messaging. Professor Felix Sze, Deputy Director of CUHK’s Centre for Sign Linguistics and Deaf Studies (CSLDS), begs to differ.

She points out that written communication poses a major challenge for deaf people. ‘The Chinese sentence “I have no book” becomes “I book no have” in sign language. Requiring deaf people, who are not native readers and writers of Chinese, to follow Chinese grammar is like asking a Chinese speaker to express herself in Chinese while following English syntax.’ Understanding the communication needs of deaf people is therefore a prerequisite for a truly inclusive society.

Immersive game for sign language learners

In collaboration with Google, the Nippon Foundation and Kwansei Gakuin University in Japan, CUHK has launched SignTown, the first multi-language online sign language game to integrate AI with sign linguistic theory. It allows players to learn Hong Kong and Japanese sign languages in an engaging online environment, using machine learning that recognises 3D sign language movements.


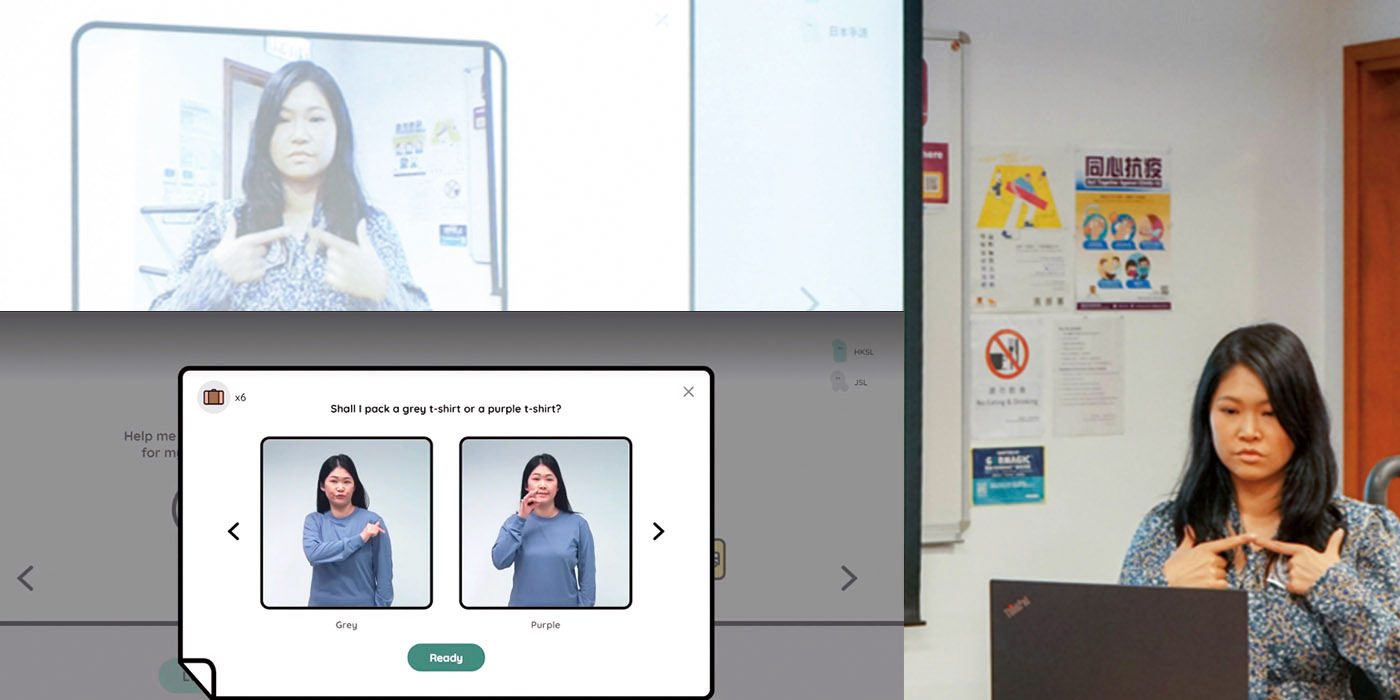

The game puts players in a virtual town whose official language is sign language. Players make signs in front of their computer cameras to complete tasks such as packing for a trip, finding a hotel and ordering food in a café. When a split screen shows two signs during an activity, players pick one and imitate it in front of the camera. The AI-powered recognition model then gives immediate feedback on how accurately they signed. Cute hand-shaped cartoon characters appear from time to time to explain key concepts about sign language, deaf people and deaf culture.

An initiative of Project Shuwa, SignTown is the product of international, cross-disciplinary collaboration among CUHK, Google and Japanese partners, which aims to bridge the gap between the deaf and hearing worlds with advanced technology. ‘Shuwa’ (手話 in Japanese) means ‘sign language’. The game was launched on 23 September 2021, the International Day of Sign Languages.

AI-based movement recognition

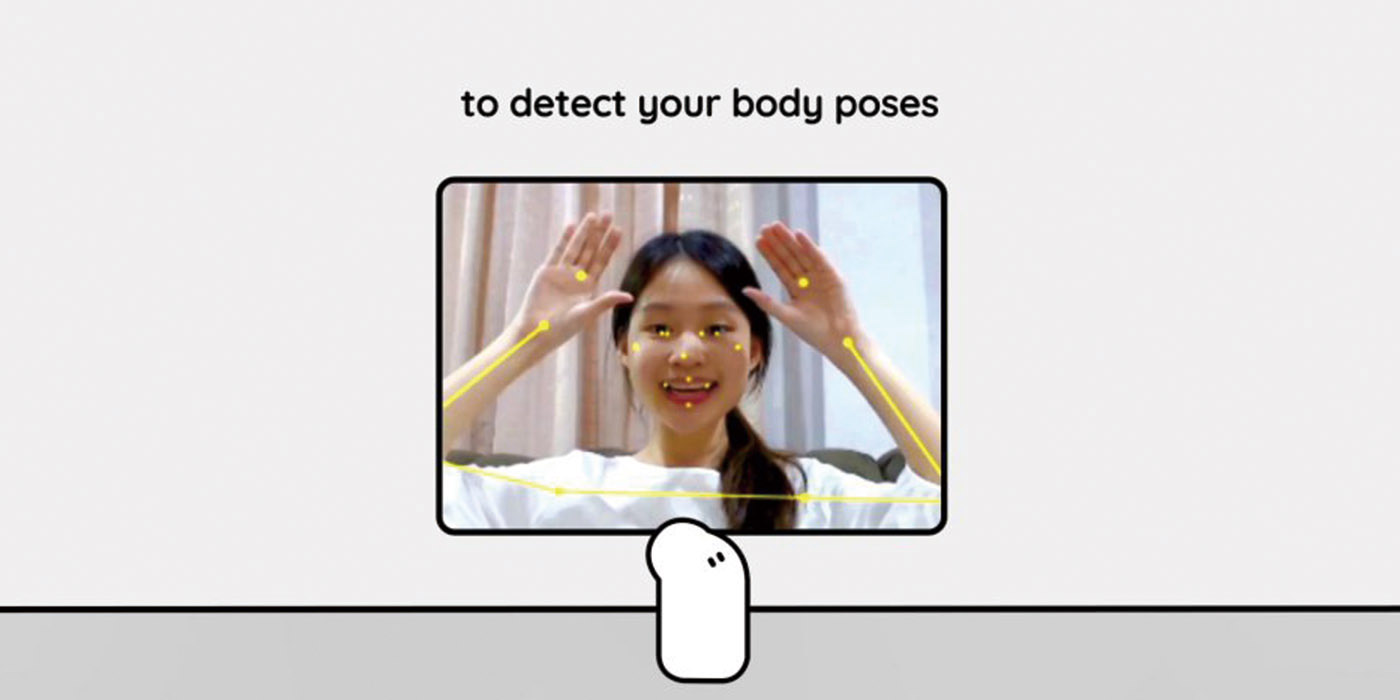

Previous sign language recognition models were not accurate enough because they struggled to analyse visual-gestural language data. Sign language conveys information through a range of channels, including hand and body movements, facial expressions and mouth shapes. Signing without these subtleties can result in ungrammatical or uninterpretable messages.

While sign languages vary from one country to another, phonetic features such as handshapes, orientations and movements are universal, and the number of possible combinations is finite. This makes it feasible to build recognition models. However, recognising sign language, including hand and body movements, has typically required specialised equipment such as 3D cameras and gloves fitted with sensors, which has made the technology hard to popularise.

Project Shuwa has addressed these pain points by building the first machine learning model that uses a computer camera to recognise, track and analyse 3D hand and body movements as well as facial expressions. Players can currently choose between Japanese and Hong Kong sign languages, with more options planned for the future.

In Project Shuwa, CSLDS contributes sign linguistic expertise and knowledge of the features of Hong Kong sign language to the development of the machine learning model. ‘As sign linguists, we want to help our collaborators to develop a sign language recognition tool which is smart enough to tell whether the players are making accurate signs,’ said Professor Sze.

A future goal of the project is to build an AI-based sign dictionary that not only offers a search function but also serves as a virtual platform for sign language learning and documentation. The project team also aspires to develop an automatic translation model that can recognise natural conversations in sign language and convert them into spoken language, using the cameras of ordinary computers and smartphones.

Professor Katsuhisa Matsuoka, Director of the Centre for Sign Language Studies at Kwansei Gakuin University, said, ‘It is a great pleasure for our centre to be involved in the technical development of Project Shuwa. We hope in the future to be able to develop expert versions of learning tools for medical and legal professionals. These are all worth exploring.’

‘Our long-term goal is to create a barrier-free society that is truly inclusive for deaf signers or people who may benefit from signing communications. There is still a long way to go, but SignTown has opened up a lot more opportunities for people around the world to learn and know more about sign language in real life situations,’ said Professor Sze. The team plans to add more signs to SignTown so that people around the world can learn more sign languages and tell them apart. With the help of the latest technology, the team hopes to help realise the vision of an inclusive and harmonious society that offers equal opportunities to all its members.

By Jenny Lau

This article appeared in Sustainable Development Matters 2022.