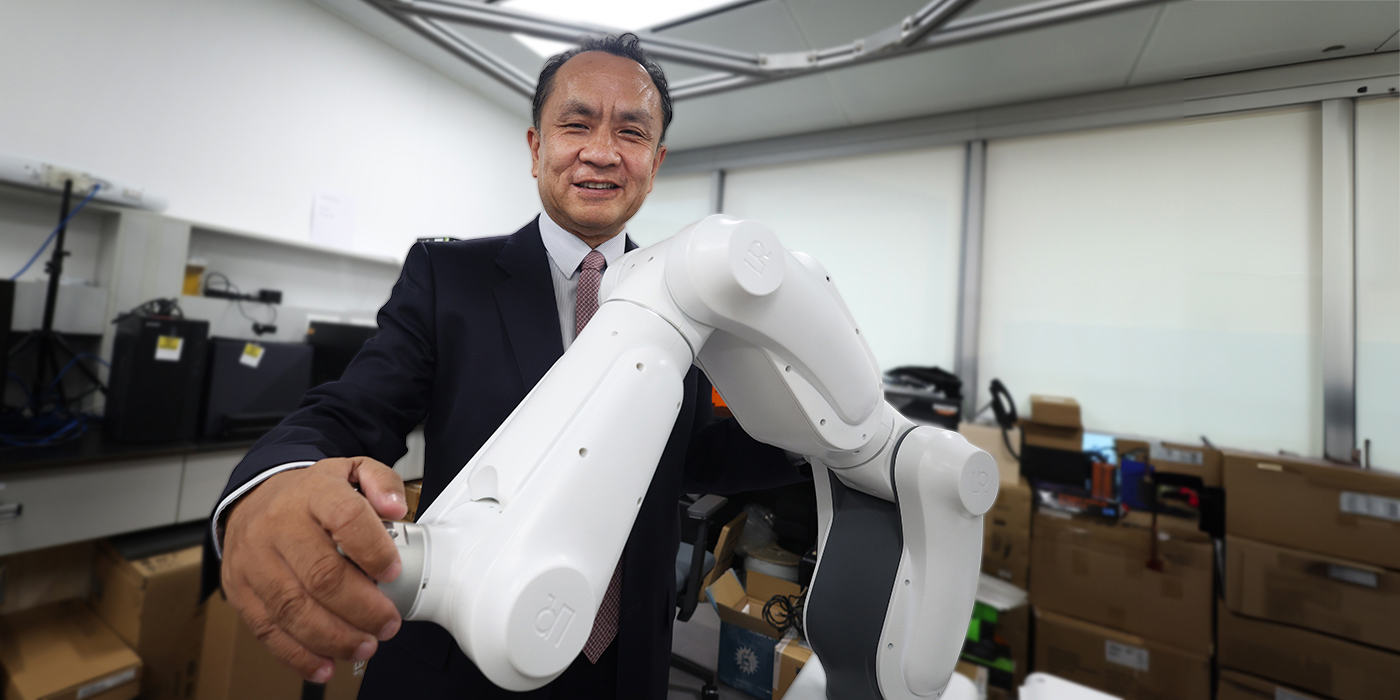

Making robots ever smarter

Liu Yun-hui drives robots with 3D-vision

This is part six of our series in CUHK In Focus where we talk to principal investigators of the seven CUHK projects chosen for the Hong Kong government’s Research, Academic and Industry Sectors One-plus (RAISe+) Scheme. It provides up to HK$100 million to each approved project to help local universities commercialise their research outcomes.

Professor Liu Yun-hui has been devoted to building robots his whole career. His innovations in industrial robots have marked milestones in robotics at CUHK, most notably the vision-based autonomous forklift his team developed in 2016, which has been deployed by warehouses around the world and helped his startup grow into a unicorn.

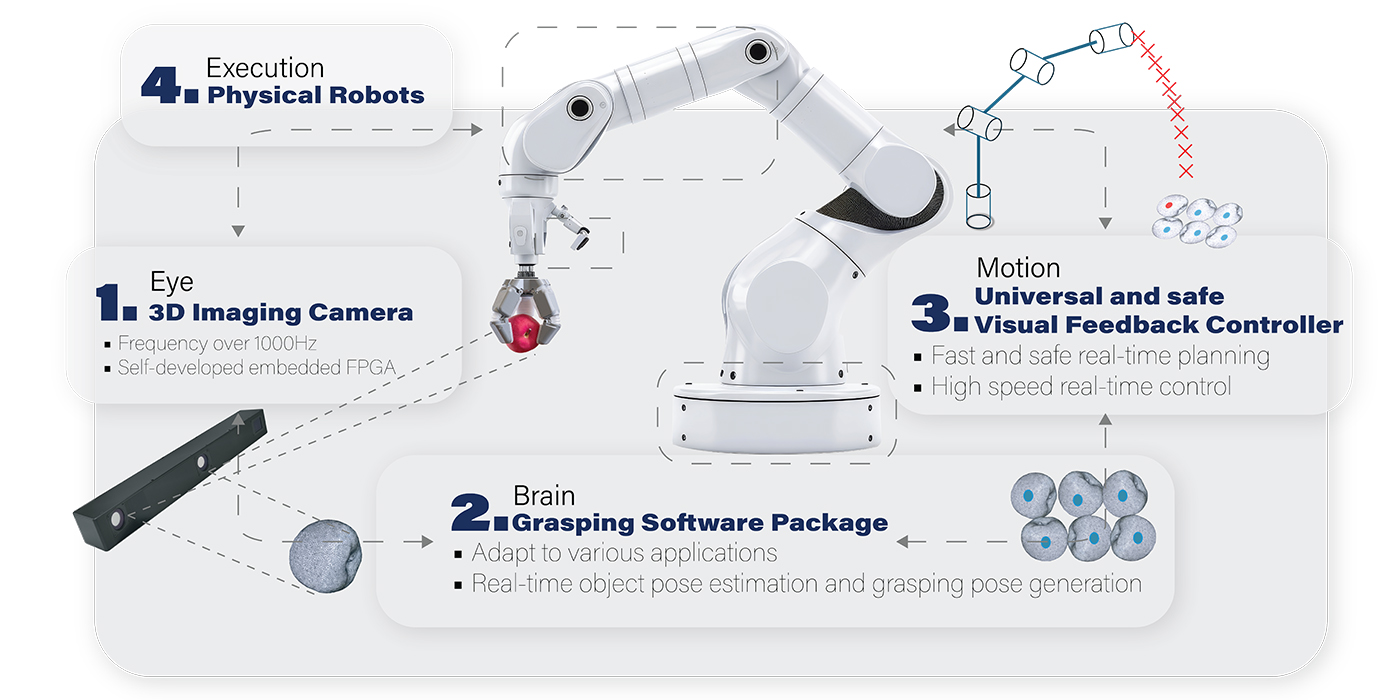

In recent years, Professor Liu’s team has focused on developing innovative products and applications for 3D vision-driven robots in various domains, including smart factories, smart warehouses and smart cities, aiming to make them smart enough to operate not just in industrial contexts, but also in the service industry, where environments are more dynamic, sometimes with humans around. Like humans, these 3D vision-driven robots should have good eye-brain-motion coordination and respond quickly to changes, he says.

“An ordinary robot does not have eyes. You need to teach it how to walk, how to pick up an object and then come back – it keeps repeating these steps. It does not have human intelligence,” Professor Liu explains. “In some industrial settings and in service industries, there are people around and the environment is full of uncertainties. The tasks required are not so repetitive. This gives rise to safety problems.”

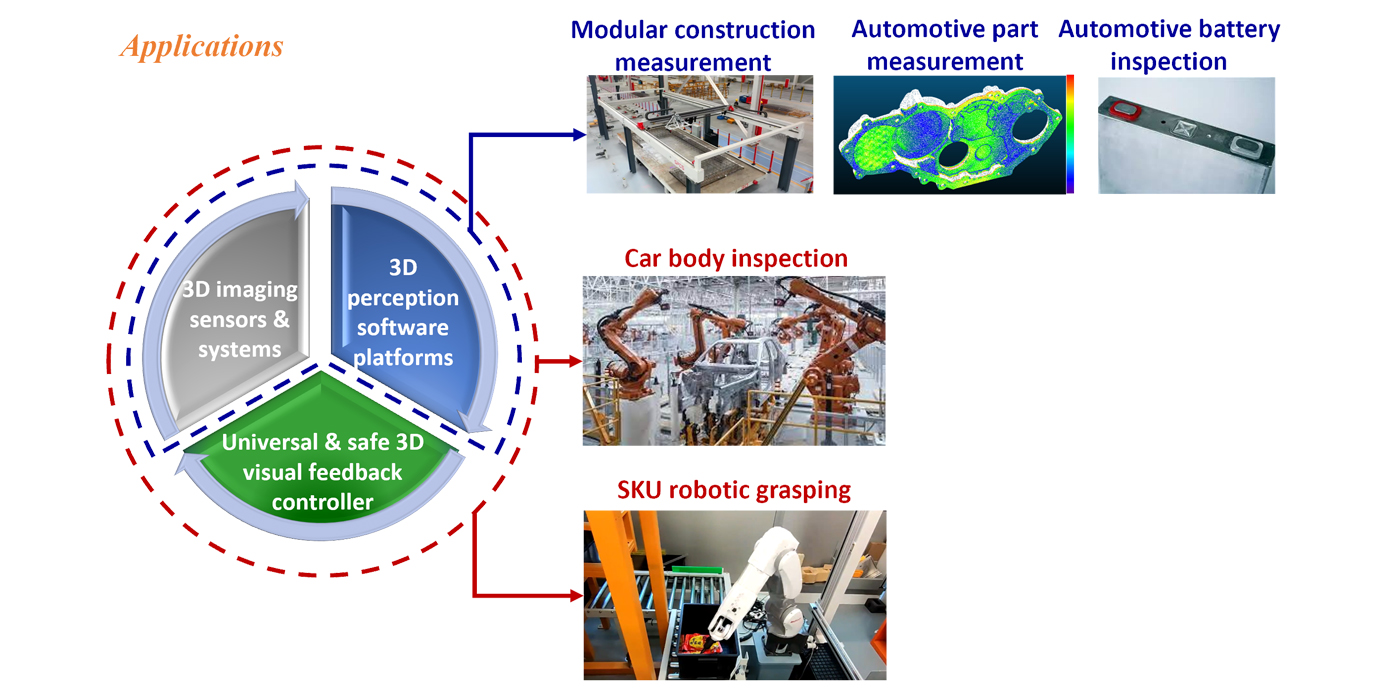

Now, with funding from the government’s inaugural Research, Academic and Industry Sectors One-plus (RAISe+) Scheme, Professor Liu’s team aims to develop and commercialise technologies and products involving 3D vision-driven robots that realise effective real-time eye-brain-motion coordination for more versatile, safer operations. The robots will be useful in modular construction for measuring modular parts, and in the automotive industry for automated measurement of parts and batteries, as well as for car inspection. The robots could also be deployed in robotic grasping in warehouses or other settings.

The team comprises multidisciplinary experts from CUHK’s Department of Mechanical and Automation Engineering, of which Professor Liu is a member, and the Department of Computer Science and Engineering, including Professors Fu Chi-wing and Dou Qi. The funding will give them a boost in transferring the years of knowledge they have built up into real-world applications for Hong Kong and beyond, Professor Liu says.

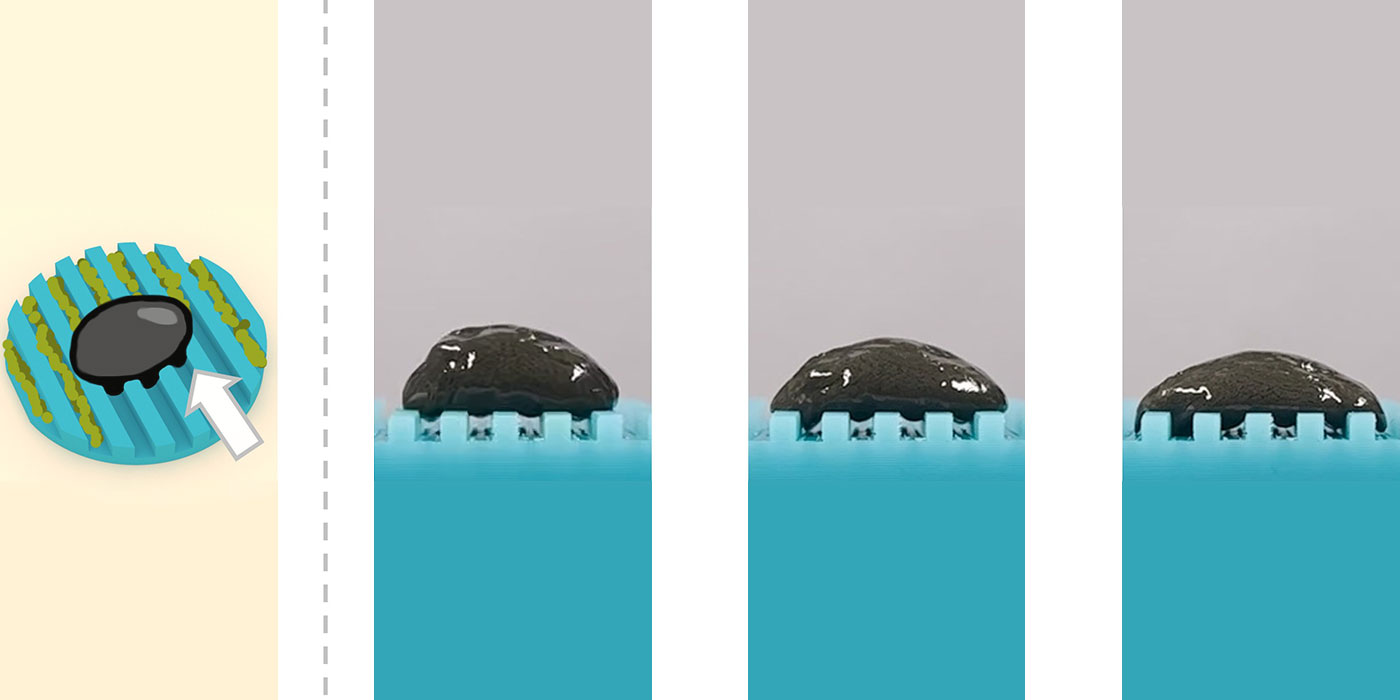

Existing 3D vision robots have slow visual feedback, making it challenging to perform operations safely, quickly and with high adaptability, he explains. For industrial or service robots to accomplish complex tasks, they need to achieve eye-brain-motion coordination similar to humans. Current products have a coordination frequency of only 0.5Hz to 2Hz. The team’s goal is to develop 3D vision-driven robots with coordination frequencies closer to humans’, reaching 200Hz to 1kHz.
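To put those frequencies in perspective, the short sketch below converts each one into the time a robot has for a single see-think-move cycle, and into how far a nearby object could travel during that cycle. The function names and the 1 m/s object speed are illustrative assumptions, not figures from Professor Liu's team.

```python
def cycle_period_ms(frequency_hz: float) -> float:
    """Time available for one perceive-decide-act cycle, in milliseconds."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1000.0 / frequency_hz


def travel_between_updates_mm(frequency_hz: float, speed_m_per_s: float) -> float:
    """Distance an object moves between two consecutive updates, in millimetres."""
    period_s = 1.0 / frequency_hz
    return speed_m_per_s * period_s * 1000.0


# Assumed speed of a person or object moving near the robot (hypothetical value).
ASSUMED_SPEED_M_PER_S = 1.0

# Frequencies quoted in the article: current products vs. the team's goal.
for label, hz in [("current, low", 0.5), ("current, high", 2.0),
                  ("target, low", 200.0), ("target, high", 1000.0)]:
    period = cycle_period_ms(hz)
    drift = travel_between_updates_mm(hz, ASSUMED_SPEED_M_PER_S)
    print(f"{label:>14}: {hz:>6} Hz, {period:>7.1f} ms per cycle, "
          f"object moves {drift:>7.1f} mm between updates")
```

Under that assumed speed, a robot updating at 2Hz would be working from a picture of the scene that is up to half a metre out of date, while one updating at 1kHz would be off by about a millimetre.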

“Primarily, we want to improve the artificial intelligence here. This way, robots can perform tasks like sorting and shelf stocking in logistics settings and retail stores,” Professor Liu notes. “For instance, while we already see robots serving meals in restaurants, most of them can only roll back and forth, and do no more, still lacking the versatility needed for more dynamic and complex operations. I hope they could adapt to more intricate tasks, like helping clean up tables or taking up simple tasks in the kitchen in the future, with high versatility.”

Professor Dou, who has been developing computer algorithms and accumulating substantial experience in robot vision research, explains that traditional robotic vision is mainly semantic, which means a robot is capable of interpreting visual data in a way that assigns meaningful labels to identify objects and scenes – “an apple” or “a water bottle”, for example. The team is aiming to give these abilities a major boost.

“In the current project, we are also getting the robot to estimate accurately the pose of the object in front of it and to calculate the relative positions of the object and the robotic arm in three-dimensional space, so that the robot can plan the motion of its arm to grasp any randomly placed target objects from suitable angles. This way, robotic arms will provide greater flexibility than before.”
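The relative-position calculation Professor Dou describes is commonly expressed with rigid-body transforms. The sketch below is a generic textbook illustration, not the team's method: it takes an object pose seen by a camera and re-expresses it relative to a robot's gripper. All frame names and numeric values are hypothetical, and rotation is limited to the vertical axis to keep the example short.

```python
import math


def make_transform(yaw_rad, translation):
    """Build a 4x4 homogeneous transform: rotation about z, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a, b):
    """Chain two transforms (4x4 matrix product a @ b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert(t):
    """Invert a rigid transform: rotation R becomes R^T, position p becomes -R^T p."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def position(t):
    """Extract the (x, y, z) position from a transform."""
    return (t[0][3], t[1][3], t[2][3])


# Hypothetical poses, in metres and radians.
base_T_camera = make_transform(math.pi / 2, (0.5, 0.0, 1.0))  # camera mounting
camera_T_object = make_transform(0.0, (0.2, 0.1, 0.0))        # from vision
base_T_gripper = make_transform(0.0, (0.3, 0.0, 0.8))         # from arm joints

# Object pose in the robot's base frame, then relative to the gripper.
base_T_object = compose(base_T_camera, camera_T_object)
gripper_T_object = compose(invert(base_T_gripper), base_T_object)

print("object in base frame:   ", position(base_T_object))
print("object relative to grip:", position(gripper_T_object))
```

The offset between gripper and object is what a motion planner would then try to close while choosing a suitable approach angle.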

Artificial intelligence models currently on the market mostly focus on collecting and organising various corpora and feeding them into neural network inference models for the computer to learn, she notes. They primarily rely on language model-based machine learning techniques to effectively accomplish natural language processing.

“However, these AI systems are generally believed to have limited understanding of the physical world. For instance, when we need a robot to grasp an object, where should it place the object afterwards? We need to help the robot develop a thorough understanding of the real world, collecting data from warehouses and actual scenarios in the service industry in order to build and adapt large AI models.”

In preparing for the RAISe+ application, Professor Liu says everything has built on the team’s collective efforts over the years.

“You need to accumulate a lot of experience and knowledge over a long period of time and then identify suitable applications. It’s equally important to develop what we call ‘deep tech’, where your technology is competitive and innovative,” Professor Liu remarks. “Lastly, bring in industry partners.”

The project team will closely collaborate with some of these partners to drive the commercialisation of three-dimensional, vision-driven robots in various fields. Companies like China Resources and Wuling Motors are on board to provide different service, industrial and construction scenarios for the team to explore.

By Joyce Ng

Photos by D. Lee
